TL;DR — Respond.io rebuilds its mobile app for high-volume B2C customer conversations

Eliminating app lag: Respond.io's new React Native architecture is purpose-built to handle massive messaging spikes without the UI freezes or crashes common in other mobile inboxes.

Near-perfect stability: Provides a 99.939% crash-free rate using React Native’s JSI and Fabric technologies for direct, instant execution.

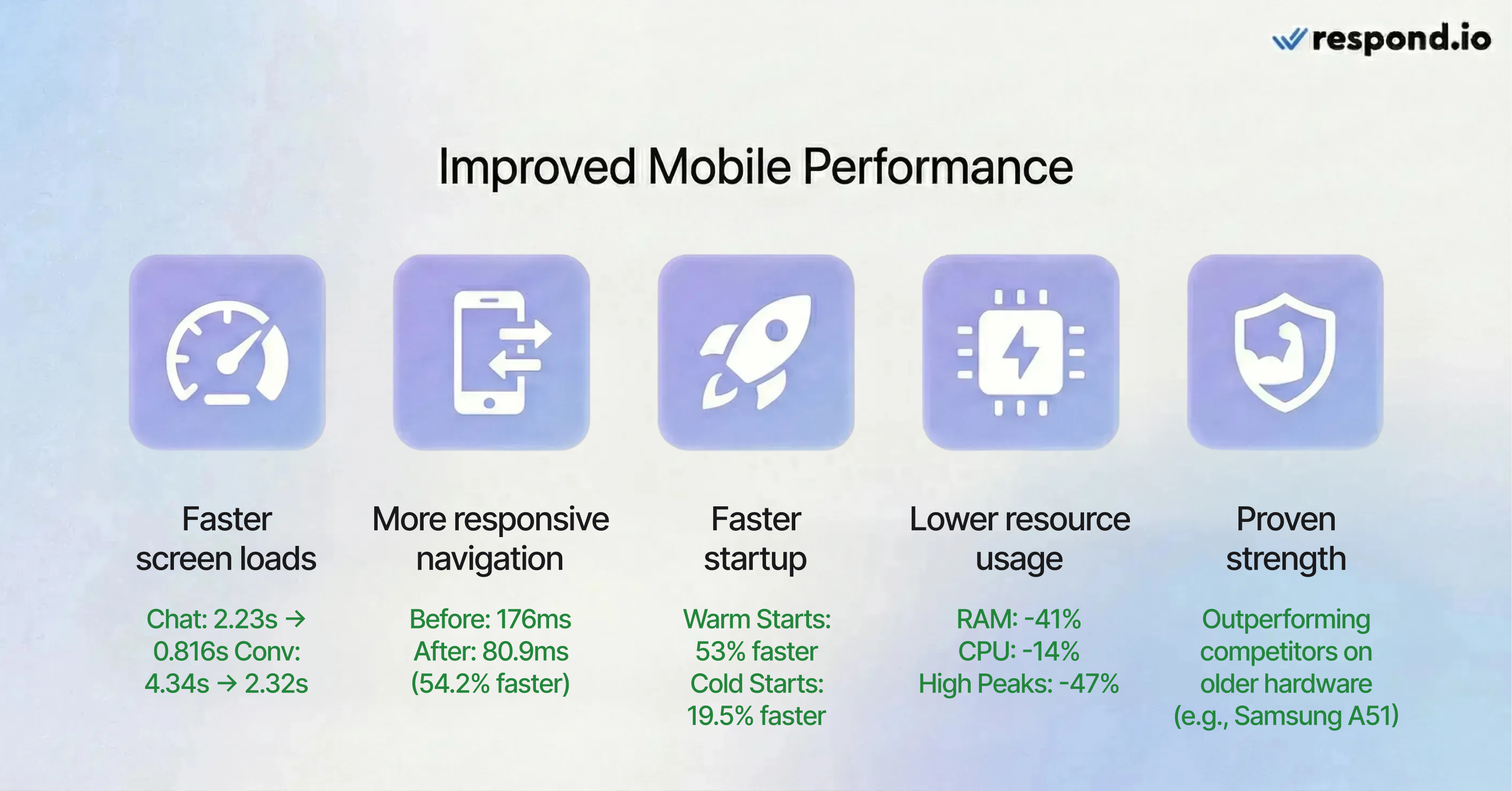

Faster agent responses: Delivers 54.2% faster screen-switching and up to 64% faster chat interactivity (measured on Android), allowing agents to manage more customers in less time.

Optimized for older devices: Reduces RAM usage by 41%, ensuring smooth performance even on hardware where competitors struggle to load media-heavy chats.

Many customer conversation platforms treat mobile apps as lightweight companions to desktop tools. Those apps break down under multi-agent usage, heavy conversation loads and media-heavy workflows.

The respond.io app is engineered to stay fast, stable and responsive during peak seasons, enabling teams to handle conversations quickly and reliably at scale from anywhere.

How does respond.io handle high-volume chats and calls on mobile?

Respond.io handles high-volume B2C messaging through a rebuilt mobile architecture that maintains a 99.939% crash-free rate even during peak traffic spikes. By moving to a modern execution model, the app eliminates the UI freezes and latencies common in desktop-first inbox tools, ensuring agents can manage concurrent conversations and media-heavy chats without performance degradation.

In B2C businesses, mobile performance matters most during revenue-generating moments such as product launches, flash sales, seasonal campaigns, and ad-driven lead spikes. Agents rely on mobile access to respond quickly, qualify leads, and close conversations without waiting for desktop access.

To eliminate the UI freezes and lag that disrupt agents during peak traffic, we engineered our architecture to handle concurrent B2C workloads that traditional mobile inboxes cannot support:

Zero-lag messaging with React Native New Architecture, replacing the old JS–Native Bridge with direct execution paths for significantly lower latency and smoother rendering.

Media-heavy fluidity with an efficient image caching strategy that optimizes decoding, storage and memory handling to reduce bandwidth usage and eliminate UI slowdowns in media-heavy chats.

Scalable architecture with code-level optimizations that reduce unnecessary re-renders, defer non-critical API calls, modernize core packages and remove legacy dependencies for streamlined execution.

Together, these upgrades form the complete foundation behind the app’s new performance gains.
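One of the code-level optimizations listed above, reducing unnecessary re-renders, can be illustrated with a minimal sketch. This is framework-free TypeScript and not respond.io's code: `ChatRowProps`, `shallowEqual` and `memoizeRender` are hypothetical names, and the shallow comparison only mirrors the general idea behind memoized components rather than any specific library API.

```typescript
// Hypothetical props for one row in a conversation list.
interface ChatRowProps {
  id: string;
  lastMessage: string;
  unreadCount: number;
}

// True when both objects have the same own keys with identical values.
function shallowEqual<T extends object>(a: T, b: T): boolean {
  const keysA = Object.keys(a) as (keyof T)[];
  const keysB = Object.keys(b);
  if (keysA.length !== keysB.length) return false;
  return keysA.every(
    (key) =>
      Object.prototype.hasOwnProperty.call(b, key) && Object.is(a[key], b[key])
  );
}

// Wraps a render function so it only re-runs when the props actually change.
function memoizeRender<P extends object, R>(
  render: (props: P) => R
): (props: P) => R {
  let lastProps: P | undefined;
  let lastResult: R | undefined;
  return (props: P): R => {
    if (lastProps !== undefined && shallowEqual(lastProps, props)) {
      return lastResult as R; // props unchanged: reuse the previous output
    }
    lastProps = props;
    lastResult = render(props);
    return lastResult;
  };
}

let renderCount = 0;
const renderChatRow = memoizeRender((props: ChatRowProps): string => {
  renderCount += 1;
  return `${props.id}: ${props.lastMessage} (${props.unreadCount} unread)`;
});

renderChatRow({ id: "c1", lastMessage: "Hi", unreadCount: 1 });
renderChatRow({ id: "c1", lastMessage: "Hi", unreadCount: 1 }); // same props, render skipped
const updatedRow = renderChatRow({ id: "c1", lastMessage: "Is it in stock?", unreadCount: 2 });
// renderCount is now 2: the middle call reused the cached output.
```

In a busy inbox, most rows are unchanged when one new message arrives, so skipping their render work is where this kind of check pays off.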

The most stable mobile solution for B2C scale: Why legacy architecture fails (and how we fixed it)

Earlier versions of the respond.io mobile app struggled under high conversation loads, and some users experienced slowness or lag. These were legitimate issues caused by limitations in the previous mobile architecture, which is commonly used by chat apps. We purpose-built our new architecture to support high-volume, multi-agent workflows reliably.

| Capability | Legacy Architecture (Standard Inboxes) | New Architecture (Respond.io Mobile App) | User and Business Impact |
| --- | --- | --- | --- |
| Communication path | Single serialized bridge | Direct execution via JSI | Agents experience faster interactions under peak traffic, reducing delays when replying to customers at scale. |
| Rendering | Slower, bottleneck-prone | Modern Fabric renderer | Screens render smoothly even with media-heavy conversations, preventing UI freezes during chats. |
| Workload handling | Queue congestion | Concurrent processing | Teams can handle higher conversation spikes without performance degradation. |
| Responsiveness | Lag under heavy use | Smooth UI under load | Agents switch between chats faster, maintain quick response speed, and avoid missed or delayed replies that impact revenue. |

Before: Our legacy architecture lagged under peak traffic

Previously, the mobile app, like most standard chat apps, relied on the legacy React Native JS–Native Bridge in which all communication between JavaScript and native code was routed through a single, serialised pathway. Under high conversation volumes or multi-agent usage, this created queue congestion that slowed rendering, delayed navigation, and caused inconsistent performance, especially when agents switched screens or handled media-heavy chats. This was not a traffic issue alone, but an architectural constraint that prevented parallel work under sustained load.

After: Our new parallel architecture delivers zero-lag performance

We rebuilt our mobile architecture to enable direct execution through the JavaScript Interface (JSI), allowing JavaScript to communicate with native modules without serialisation overhead. Combined with the Fabric renderer for more predictable UI updates and TurboModules for on-demand native module loading, the app can process more operations in parallel rather than forcing them through a single execution path.

This reduces latency, improves responsiveness, and keeps performance stable during peak activity. A unified execution model across iOS and Android also ensures consistent behavior across devices.
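The difference between the two communication styles can be sketched with a toy model. The code below does not use React Native APIs and is not respond.io's implementation: `LegacyBridge` and `nativeUppercase` are hypothetical stand-ins that only imitate a serialized, queued message path versus a direct function call.

```typescript
// Toy model only: these names imitate the two communication styles
// and are not React Native APIs.
type NativeHandler = (args: unknown[]) => unknown;

// Legacy style: every call is serialized to JSON, queued, and processed
// in order when the queue is flushed, so one slow call delays the rest.
class LegacyBridge {
  private queue: string[] = [];
  private handlers: Record<string, NativeHandler>;

  constructor(handlers: Record<string, NativeHandler>) {
    this.handlers = handlers;
  }

  enqueue(method: string, args: unknown[]): void {
    // Serialization cost is paid on every call.
    this.queue.push(JSON.stringify({ method, args }));
  }

  flush(): unknown[] {
    const results: unknown[] = [];
    for (const message of this.queue) {
      const parsed = JSON.parse(message) as { method: string; args: unknown[] };
      const handler = this.handlers[parsed.method];
      if (handler === undefined) {
        throw new Error(`Unknown method: ${parsed.method}`);
      }
      results.push(handler(parsed.args));
    }
    this.queue = [];
    return results;
  }
}

// Stand-in for a native function.
const nativeUppercase: NativeHandler = (args) => String(args[0]).toUpperCase();

// Bridged path: results arrive only after the whole queue is flushed.
const toyBridge = new LegacyBridge({ uppercase: nativeUppercase });
toyBridge.enqueue("uppercase", ["hello"]);
toyBridge.enqueue("uppercase", ["world"]);
const bridgedResults = toyBridge.flush();

// Direct path: JavaScript holds a reference to the function and calls it
// synchronously, with no JSON round trip and no shared queue.
const directResult = nativeUppercase(["hello"]);
```

Both paths return the same values here. The point of the sketch is the extra serialize, queue and parse steps that every bridged call goes through.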

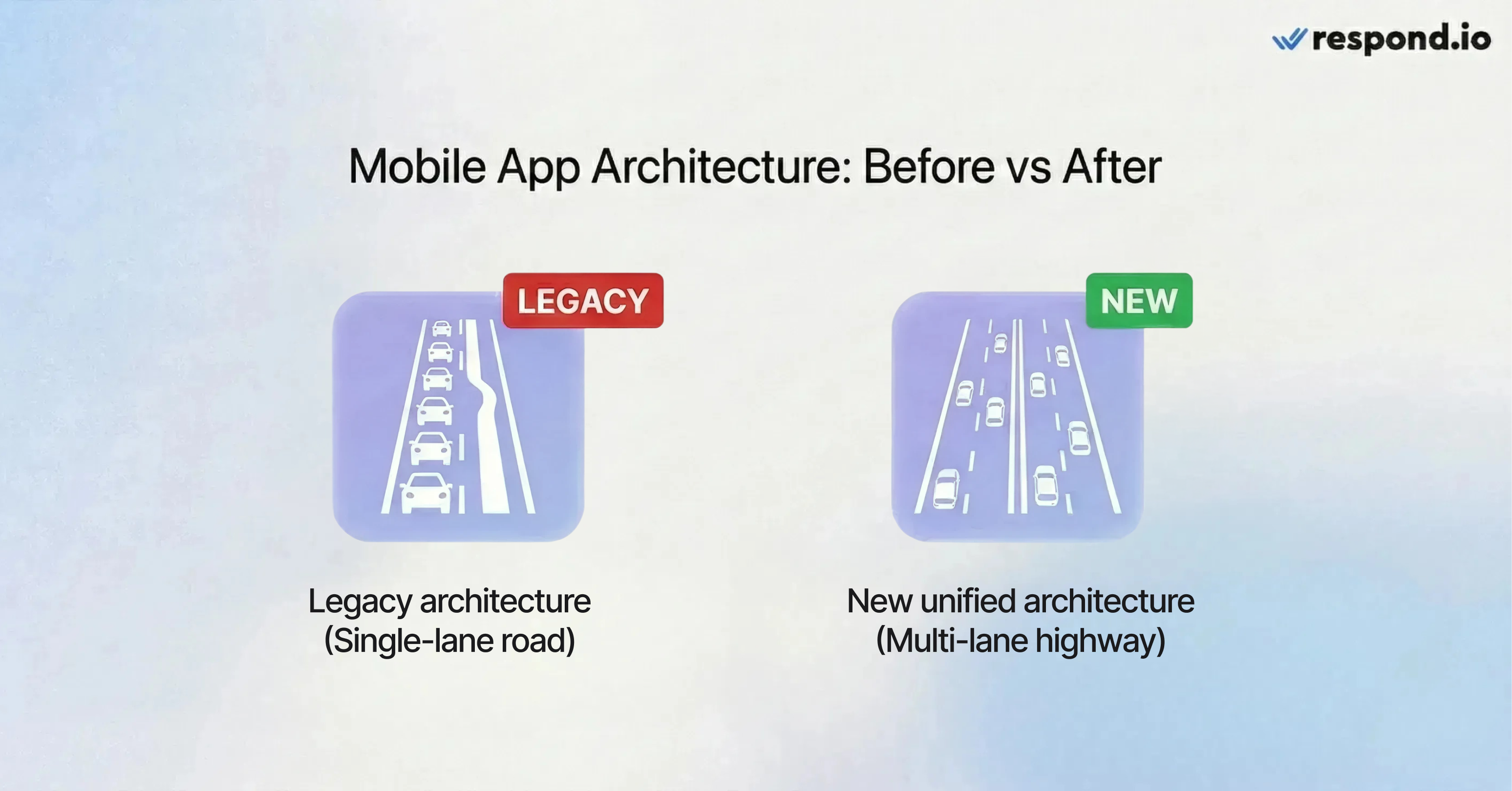

A simple way to understand the difference

According to respond.io mobile team lead Bilal Shah, the old architecture behaved like a single-lane road, where every operation had to wait its turn and one slowdown blocked everything behind it.

The new architecture functions like a multi-lane highway, where multiple operations can run in parallel. This is why screens load faster, navigation feels instant, and the app remains responsive under heavy workloads.

Verified results: Industry-leading performance with 99.939% crash-free rate, high speed and reliability under load

We monitored real-world performance via Sentry to verify the impact of our architecture. The data proves that our platform outpaces standard mobile inboxes in reliability and speed.

Near-perfect stability: Achieved a 99.939% crash-free rate, ensuring continuous uptime for revenue-critical workflows.

Faster chat interactivity: Response times improved by 64% on Android and by 18% on iOS.

Rapid conversation loading: Load times dropped to 1.07s on iOS (from 3.73s) and 2.32s on Android (from 4.34s).

Responsive navigation: Screen-switching latency reduced by 54.2% (to 80.9ms), enabling instant switching between chats.

Resource efficiency: Reduced RAM usage by 41% and high CPU spikes by 47%, preserving battery life on agent devices.

Faster startup: Warm start times improved by 53% and cold starts by 19.5%, allowing agents to resume work instantly.

Reliable on older devices: Outperforms industry peers in navigation speed and cold starts on older hardware (e.g. Samsung A51), ensuring speed on any device.

The net effect of these results is higher mobile agent throughput and fewer stalled conversations during traffic spikes.
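For readers who want to compare these figures on one scale, the arithmetic below derives a few values from the numbers quoted above. The derived values are calculations, not additional measurements, and the helper names `percentReduction` and `round1` are illustrative only.

```typescript
// Percentage reduction from a "before" value to an "after" value.
function percentReduction(before: number, after: number): number {
  return ((before - after) / before) * 100;
}

// Rounds to one decimal place for display.
function round1(value: number): number {
  return Math.round(value * 10) / 10;
}

// Conversation load times quoted above, in seconds.
const iosLoadReduction = round1(percentReduction(3.73, 1.07)); // 71.3
const androidLoadReduction = round1(percentReduction(4.34, 2.32)); // 46.5

// A 54.2% reduction that lands at 80.9 ms implies this earlier latency.
const previousSwitchMs = round1(80.9 / (1 - 0.542)); // 176.6

// A 99.939% crash-free rate leaves 0.061% affected, which is 61 per
// 100,000 sessions or users, depending on how the metric is defined.
const crashesPer100k = Math.round(((100 - 99.939) / 100) * 100000); // 61
```

Expressed as percentages, the conversation load time dropped by roughly 71% on iOS and 47% on Android.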

UX improvements enabled by the mobile app optimization

The new architecture also delivered usability and efficiency gains across key mobile workflows.

Efficient image caching: Reduces redundant decoding and GPU/RAM usage to stabilize rendering in media-heavy conversations.

Smoother scrolling under load: Benchmarks confirm 35% lower RAM usage during continuous 100-message scroll tests.

Consistent UI performance: A leaner codebase minimizes background work, ensuring smooth, rapid task switching.

Clearer notification flows: Provide notification context, display permission statuses and log behavior for easier troubleshooting.
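The image caching item above can be illustrated with a small sketch of a memory-bounded cache. It assumes a least-recently-used eviction policy, which the article does not specify, and `ImageLruCache` and `CachedImage` are hypothetical names. A production app would more likely rely on the cache built into its image library.

```typescript
// Hypothetical record for a decoded image held in memory.
interface CachedImage {
  uri: string;
  bytes: number; // decoded size in memory
}

class ImageLruCache {
  // A Map preserves insertion order, so its first key is the least recently used.
  private entries = new Map<string, CachedImage>();
  private usedBytes = 0;
  private readonly maxBytes: number;

  constructor(maxBytes: number) {
    this.maxBytes = maxBytes;
  }

  get(uri: string): CachedImage | undefined {
    const hit = this.entries.get(uri);
    if (hit === undefined) return undefined;
    // Re-insert to mark the entry as most recently used.
    this.entries.delete(uri);
    this.entries.set(uri, hit);
    return hit;
  }

  set(image: CachedImage): void {
    const existing = this.entries.get(image.uri);
    if (existing !== undefined) {
      this.usedBytes -= existing.bytes;
      this.entries.delete(image.uri);
    }
    this.entries.set(image.uri, image);
    this.usedBytes += image.bytes;
    // Evict least recently used entries until the budget is respected.
    while (this.usedBytes > this.maxBytes) {
      const oldest = this.entries.keys().next().value;
      if (oldest === undefined) break;
      const evicted = this.entries.get(oldest);
      this.entries.delete(oldest);
      this.usedBytes -= evicted?.bytes ?? 0;
    }
  }

  has(uri: string): boolean {
    return this.entries.has(uri);
  }
}

// Small numbers keep the example readable; real budgets would be in megabytes.
const imageCache = new ImageLruCache(300);
imageCache.set({ uri: "a.jpg", bytes: 100 });
imageCache.set({ uri: "b.jpg", bytes: 100 });
imageCache.set({ uri: "c.jpg", bytes: 100 });
imageCache.get("a.jpg"); // "a.jpg" becomes the most recently used entry
imageCache.set({ uri: "d.jpg", bytes: 100 }); // over budget, so "b.jpg" is evicted
```

Keeping recently viewed images decoded avoids repeating the decode step when an agent scrolls back through a chat, while the byte budget caps how much memory the cache can hold.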

Respond.io’s mobile app is built to stay fast, stable, and responsive under the exact conditions that matter most for B2C teams: high chat and call volume, multi-agent concurrency, and peak campaign traffic on channels like WhatsApp.

By combining a modern mobile architecture with UX optimisations, it enables agents to handle more conversations on the go without lag, crashes, or dropped interactions. For teams evaluating mobile apps to support revenue-driven chat and call workflows at scale, respond.io offers a level of mobile speed, stability and reliability that is difficult to achieve without a purpose-built architecture.

The respond.io mobile app is available on the Google Play Store (Android) and the App Store (iOS). Sign up for a respond.io account to try it free today.


FAQs about respond.io’s mobile app

How do I set up the respond.io mobile app?

To start using the Respond.io — Inbox mobile app, you first need an active respond.io account to enable mobile login.

Create an account: Sign up for a respond.io account on the desktop website.

Download the app: Install the mobile app from the App Store (iOS) or Google Play Store (Android).

Log in and configure: Open the app, sign in with your credentials and configure your mobile app settings.

Once done, you can start using the mobile app immediately to receive notifications, respond to customer chats and calls, and more.

Does the respond.io mobile app still have performance or lag issues?

Previous reviews mentioning mobile lag or instability refer to respond.io's legacy app and do not reflect the current mobile experience. Respond.io rebuilt its mobile app on a new React Native architecture designed to handle sustained load and high conversation concurrency.

This removed the execution bottlenecks that caused lag in earlier versions and resulted in significantly faster screen loads, smoother navigation, and near-perfect stability. These gains are validated by Sentry data from production usage, reflecting actual agent behavior under live workloads, including high conversation volumes, frequent screen switching, and use on older devices.

How does the respond.io mobile app maintain high performance at scale?

Respond.io uses the React Native New Architecture, which enables direct execution paths through JSI, concurrent rendering, and on-demand module loading. The full stack consists of:

JavaScript Interface (JSI): Direct JS–native calls reduce latency and improve responsiveness

Hermes engine: Mobile-optimized execution for better performance

TurboModules: Load modules on demand to lower startup time

Fabric renderer: More predictable layout and improved threading for efficient rendering

Codegen: Generates native bindings automatically

Combined, these changes reduced latency, lowered memory and CPU usage, and stabilised performance during peak workloads.
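On-demand module loading, the idea behind the TurboModules entry above, can be sketched with a small lazy registry. This is a toy illustration and not the TurboModules API: `LazyModuleRegistry` and the module names and factories are hypothetical.

```typescript
// Toy registry illustrating on-demand loading. It is not the TurboModules
// API; module names and factories here are hypothetical.
type ModuleFactory<T> = () => T;

class LazyModuleRegistry {
  private factories = new Map<string, ModuleFactory<unknown>>();
  private instances = new Map<string, unknown>();

  // Registering stores only the factory; nothing is constructed yet.
  register<T>(moduleName: string, factory: ModuleFactory<T>): void {
    this.factories.set(moduleName, factory);
  }

  // Creates the module on first access and reuses it afterwards.
  get<T>(moduleName: string): T {
    if (!this.instances.has(moduleName)) {
      const factory = this.factories.get(moduleName);
      if (factory === undefined) {
        throw new Error(`Module not registered: ${moduleName}`);
      }
      this.instances.set(moduleName, factory());
    }
    return this.instances.get(moduleName) as T;
  }

  loadedCount(): number {
    return this.instances.size;
  }
}

const moduleRegistry = new LazyModuleRegistry();
moduleRegistry.register("camera", () => ({ takePhoto: () => "photo.jpg" }));
moduleRegistry.register("audio", () => ({ record: () => "note.m4a" }));

const loadedAtStartup = moduleRegistry.loadedCount(); // 0: nothing is built at startup
const cameraModule = moduleRegistry.get<{ takePhoto: () => string }>("camera");
const photoFile = cameraModule.takePhoto();
const loadedAfterUse = moduleRegistry.loadedCount(); // 1: only the module that was used
```

Startup cost then scales with the modules an agent actually touches rather than with everything the app ships.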

How does respond.io’s mobile app compare to other chat or inbox apps?

Unlike legacy chat tools or lightweight inbox apps that are desktop-first or designed for low message volumes, respond.io’s mobile app is built for high-volume B2C operations. Its architecture is optimised for sustained concurrency, fast navigation, and reliable performance during campaigns, peak seasons, and agent-heavy workflows.

What is the best mobile app for high-volume B2C sales teams?

The best mobile app for B2C businesses that manage high customer conversation volumes is one that stays fast, stable, and responsive under sustained multi-agent conversation load. Respond.io’s mobile app is built specifically for this use case, maintaining reliable chat and call performance during peak campaigns and high concurrency where most mobile chat and inbox apps slow down or crash.

Can mobile performance affect sales over chat and calls?

Yes. In high-volume campaigns on channels like WhatsApp, TikTok, Instagram and Facebook Messenger, slow mobile performance delays replies, increases lead drop-off, and reduces conversion rates. This is especially true in high-consideration businesses like automotive purchases, luxury retail, healthcare, beauty, travel or education, where answering questions to clarify concerns and build trust is critical. Respond.io’s mobile app is built to stay fast and stable during spikes, allowing agents to respond in real time and maintain momentum from ad interest to sales.

Are there any mobile apps that can handle 10+ agents chatting with customers simultaneously?

Yes. Unlike traditional mobile CRM apps or standard mobile inboxes, respond.io's mobile app is purpose-built for high-volume B2C scale. It maintains a 99.939% crash-free rate during peak traffic spikes, while its concurrent processing model and efficient image caching keep mobile performance smooth even as your team and conversation volume grow.
